optical image sensor

- time:2025-08-14 17:36:57

Capturing Light: The Essential Guide to Optical Image Sensors

Look around. That stunning sunrise photo on your phone, the crystal-clear security footage at the supermarket door, the intricate details in a medical scan, even the self-driving car navigating traffic: they all rely on an unseen hero, the optical image sensor. This electronic eye of the modern world silently transforms patterns of light into the digital signals that power our visual experiences and intelligent systems. Understanding how these sensors work unlocks a deeper appreciation for the devices shaping our lives.

At Its Core: Light Becomes Data

An optical image sensor is fundamentally a semiconductor device that converts incident light (photons) into an electrical signal (electrons). It is the digital equivalent of film. It records the intensity of the light hitting its surface and, in color sensors, separates that light into color channels, usually with a mosaic of red, green, and blue filters (a color filter array) laid over the pixels. The sensor is made up of millions of individual light-sensitive elements called pixels (picture elements). Each pixel acts like a tiny bucket, accumulating electrical charge roughly proportional to the amount of light it receives during an exposure period, until the bucket fills up (saturates). This analog charge is then read out, converted into a digital value by an Analog-to-Digital Converter (ADC), and processed to form the final image or video frame.
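To make the photon-to-number chain concrete, here is a minimal Python sketch of a single idealized pixel. The quantum efficiency, full-well capacity, conversion gain, and bit depth are illustrative assumptions rather than figures from any real sensor. The model also ignores noise, dark current, and the black-level offset that real cameras add.

```python
def pixel_to_dn(photons, qe=0.5, full_well_e=10_000, gain_e_per_dn=2.5, bit_depth=12):
    """Convert photons hitting one idealized pixel into a digital number (DN).

    All default values are hypothetical, chosen only for illustration.
    """
    # Photodiode: only a fraction (the quantum efficiency) of photons become electrons.
    electrons = photons * qe
    # The "bucket" can only hold so much charge: clip at the full-well capacity.
    electrons = min(electrons, full_well_e)
    # ADC: divide by the conversion gain (electrons per DN) and truncate to an integer code.
    dn = int(electrons // gain_e_per_dn)
    # The code cannot exceed what the ADC's bit depth can represent.
    return max(0, min(dn, 2**bit_depth - 1))


def read_frame(photon_frame, **sensor):
    """Apply the same pixel model to every pixel of a 2-D list of photon counts."""
    return [[pixel_to_dn(p, **sensor) for p in row] for row in photon_frame]


frame = [[0, 1_000], [10_000, 100_000]]
print(read_frame(frame))  # [[0, 200], [2000, 4000]]
```

The brightest pixel saturates at the full well, which is why blown-out highlights all read the same value. Passing `bit_depth=10` would instead clip that pixel at the ADC's maximum code of 1023.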

The Two Titans: CCD vs. CMOS

Two primary technologies have dominated the optical image sensor landscape: Charge-Coupled Devices (CCD) and Complementary Metal-Oxide-Semiconductor (CMOS) sensors. While both achieve the same fundamental goal, their architectures differ significantly:

- CCD Sensors: Traditionally known for high image quality and very low noise, especially in low-light conditions. CCDs transfer the charge accumulated in each pixel step by step through the sensor array to a single output amplifier (or a small number of them) for conversion. Because every pixel passes through the same amplifier, this elegant process minimizes variations between pixels, but it requires more power and specialized manufacturing. Historically favored for scientific, medical, and high-end photography applications where ultimate quality was paramount, CCD technology has increasingly yielded ground to its more versatile counterpart.

- CMOS Sensors: The dominant force in today’s market. Unlike CCDs, CMOS image sensors place an amplifier inside every pixel (the active pixel sensor, or APS, design) and typically put ADC circuitry at the end of each column, all on the same chip as the pixel array; some designs go further with an ADC per pixel. This allows parallel readout, enabling much faster frame rates, significantly lower power consumption, and easier integration with other processing electronics on a single chip. The primary trade-off historically involved a higher noise floor and potentially lower light sensitivity (quantum efficiency) compared to high-end CCDs. However, relentless advancements in CMOS sensor design and fabrication processes (such as backside illumination, BSI) have dramatically closed these gaps. Modern CMOS sensors now offer exceptional image quality, rivaling or surpassing CCDs in many areas, while providing crucial benefits in speed, power efficiency, cost, and miniaturization.
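The difference in readout order can be sketched in a few lines of Python. This is a toy model under simplifying assumptions: the CCD's serial register is assumed to sit below the bottom row with its single output amplifier at the right-hand end, and the CMOS sensor is assumed to have one ADC per column. A "step" here simply counts sequential conversions, ignoring clock speeds and timing overheads.

```python
def ccd_readout(frame):
    """Bucket-brigade readout through one output amplifier.

    Returns (samples in output order, number of sequential amplifier conversions).
    """
    rows = [list(r) for r in frame]  # copy so the caller's frame is untouched
    samples, steps = [], 0
    while rows:
        # Vertical shift: the row nearest the serial register moves into it,
        # and every other row moves one position closer.
        serial_register = rows.pop()
        # Horizontal shift: packets are clocked, one at a time, into the single amplifier.
        while serial_register:
            samples.append(serial_register.pop())
            steps += 1
    return samples, steps


def cmos_column_parallel_readout(frame):
    """Row-by-row readout with an amplifier in each pixel and an ADC in each column."""
    samples, steps = [], 0
    for row in frame:
        # Selecting a row connects all its pixels to their column ADCs at once,
        # so the whole row is converted in a single parallel step.
        samples.extend(row)
        steps += 1
    return samples, steps


frame = [[1, 2, 3], [4, 5, 6]]
print(ccd_readout(frame))                   # ([6, 5, 4, 3, 2, 1], 6)
print(cmos_column_parallel_readout(frame))  # ([1, 2, 3, 4, 5, 6], 2)
```

For a 1920 × 1080 frame, the CCD model needs 2,073,600 sequential conversions through one amplifier, while the column-parallel CMOS model needs 1,080 row steps. That gap is the intuition behind the parallel-readout speed advantage described above.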

Key Metrics Defining Performance

Evaluating an optical image sensor involves several critical specifications:

- Resolution: Defined by the number of pixels (e.g., 12 megapixels). Higher resolution captures finer detail, but on a sensor of fixed size it means smaller pixels, which can hurt low-light performance, and it requires more processing and storage.

- Pixel Size: Measured in micrometers (µm). Larger pixels generally collect more light and can hold more charge, improving low-light performance (sensitivity) and dynamic range, but physical size constraints often favor smaller pixels for higher resolution in compact devices. Backside Illumination (BSI) flips the sensor so that light enters from the back and reaches the light-sensitive layer without first passing through the metal wiring, significantly improving light capture for small pixels.

- Dynamic Range: The ratio between the brightest and darkest details a sensor can capture simultaneously in one exposure, usually quoted in decibels (dB) or stops. Crucial for scenes with both bright highlights and deep shadows.

- Low-Light Performance / Sensitivity: How well the sensor functions in dim environments. Linked to pixel size, BSI, and sensor architecture. Key metrics include Quantum Efficiency (QE), the fraction of incoming photons converted into electrons, and signal-to-noise ratio (SNR).

- Frame Rate: The number of complete images the sensor can capture per second (FPS). Vital for video, action photography, and machine vision applications.

- Rolling vs. Global Shutter: A rolling shutter exposes and reads the sensor sequentially (line by line), so fast-moving subjects or camera pans can appear skewed or wobbly ("jello" distortion). A global shutter exposes all pixels simultaneously, eliminating this distortion, but requires a more complex pixel design and potentially higher cost and power.
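Dynamic range and SNR can be estimated from a handful of pixel parameters. The Python sketch below uses one common engineering convention: dynamic range as the ratio of full-well capacity (the most charge a pixel can hold) to read noise (the electronic noise added during readout), and an SNR in which photon shot noise and dark-current noise follow Poisson statistics, so their variance equals their mean. The full-well, read-noise, and QE values are hypothetical, and real datasheets may define these figures somewhat differently.

```python
import math


def dynamic_range(full_well_e, read_noise_e):
    """Return dynamic range in decibels and in photographic stops."""
    ratio = full_well_e / read_noise_e
    return 20 * math.log10(ratio), math.log2(ratio)


def snr(photons, qe, read_noise_e, dark_e=0.0):
    """Signal-to-noise ratio of one pixel for a single exposure.

    photons: photons reaching the pixel during the exposure
    qe: quantum efficiency (fraction of photons converted to electrons)
    read_noise_e: RMS read noise in electrons
    dark_e: mean dark-current electrons accumulated during the exposure
    """
    signal_e = qe * photons
    # Independent noise sources add in quadrature: shot-noise variance = signal,
    # dark-current variance = dark_e, read-noise variance = read_noise_e**2.
    noise_e = math.sqrt(signal_e + dark_e + read_noise_e**2)
    return signal_e / noise_e


db, stops = dynamic_range(full_well_e=10_000, read_noise_e=2.5)
print(f"DR: {db:.1f} dB, {stops:.1f} stops")  # DR: 72.0 dB, 12.0 stops
print(f"SNR at 100 photons: {snr(100, qe=0.5, read_noise_e=2.5):.2f}")  # SNR at 100 photons: 6.67
```

Raising the QE from 0.5 to a hypothetical 0.8 (the kind of gain BSI targets) lifts that SNR to about 8.6 in this model, without changing the scene at all.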
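The rolling-shutter and frame-rate bullets also lend themselves to quick arithmetic. In this Python sketch, each row of a rolling-shutter sensor is assumed to start its exposure one fixed "line time" after the previous row, so an object moving sideways is sampled at a slightly later moment in each row. The 15 µs line time and the sensor dimensions are hypothetical values, and real readout adds blanking intervals that this model ignores.

```python
def rolling_shutter_skew_px(speed_px_per_s, line_time_s, rows):
    """Horizontal offset between the top and bottom rows of a sideways-moving edge."""
    # The last row is sampled (rows - 1) line times after the first row.
    return speed_px_per_s * line_time_s * (rows - 1)


def max_frame_rate(line_time_s, rows):
    """Upper bound on frame rate if every row is read out back to back (no blanking)."""
    return 1.0 / (line_time_s * rows)


def raw_data_rate_gbit_s(width, height, bit_depth, fps):
    """Uncompressed sensor output in gigabits per second."""
    return width * height * bit_depth * fps / 1e9


line_time = 15e-6  # hypothetical 15 microseconds per row
print(rolling_shutter_skew_px(1_000, line_time, rows=1080))  # ~16.2 px of slant
print(max_frame_rate(line_time, rows=1080))                  # ~61.7 fps
print(raw_data_rate_gbit_s(3840, 2160, 12, 60))              # ~5.97 Gbit/s
```

A global shutter starts every row's exposure at the same instant, so the skew term drops to zero, although motion blur during the exposure itself still depends on exposure time.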

Where Image Sensors See the World

The applications for optical image sensors are vast and continuously expanding:

- Consumer Electronics: Ubiquitous in smartphones, digital cameras, webcams, tablets, drones, and virtual/augmented reality (VR/AR) headsets. CMOS sensors dominate here thanks to their performance, low cost, and low power consumption.

- Automotive: Essential for Advanced Driver-Assistance Systems (ADAS) and autonomous driving. Cameras provide lane departure warnings, adaptive cruise control, collision avoidance, traffic sign recognition, and surround-view monitoring. These sensors demand high reliability, wide dynamic range, and robust performance in extreme lighting conditions.

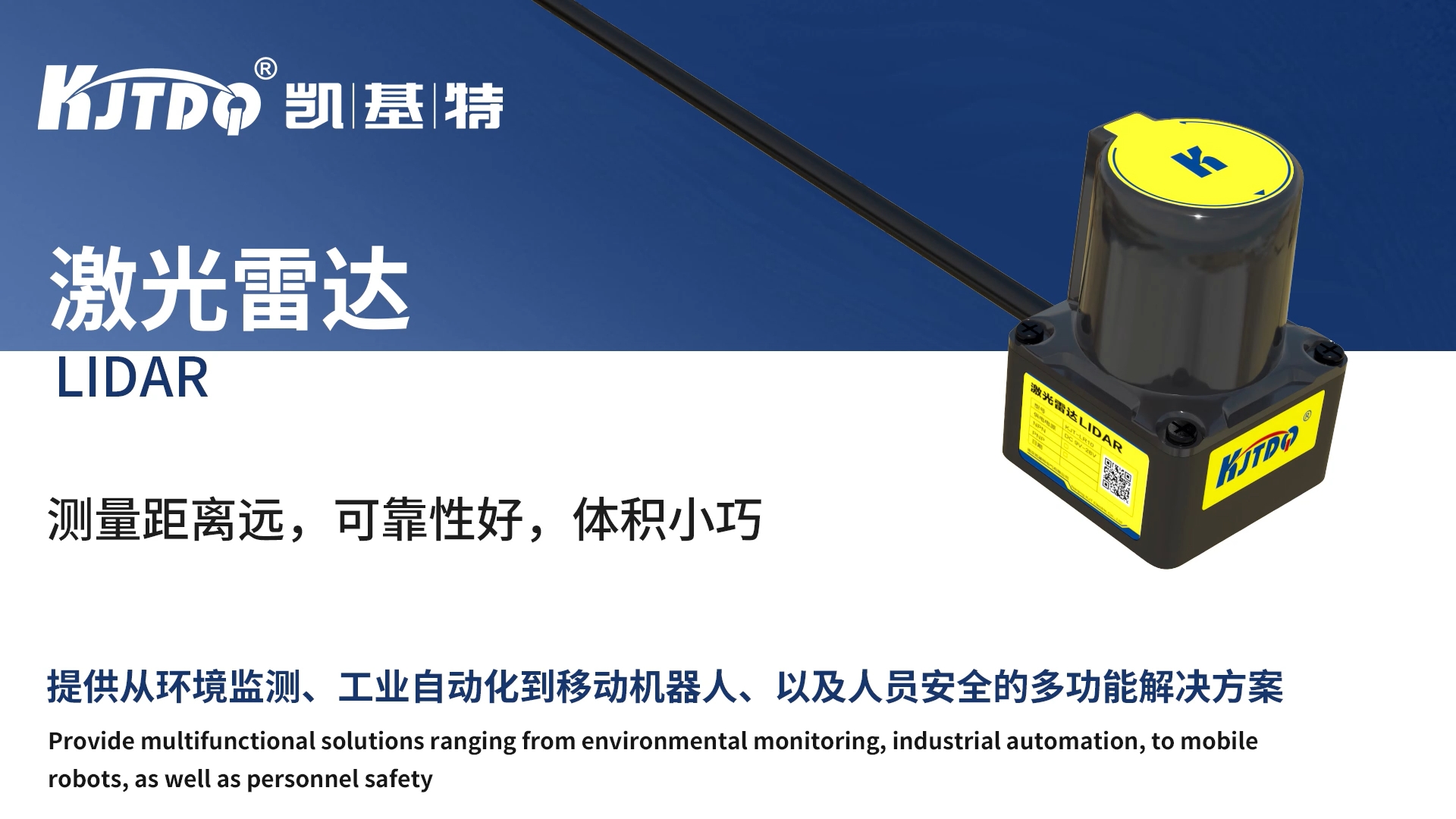

- Industrial Automation & Machine Vision: Critical for robotic guidance, automated inspection (defect detection, measurement, sorting), barcode reading, and process control. Speed, resolution, precision, and specialized capabilities (like SWIR - Short-Wave Infrared) are key.

- Medical & Scientific Imaging: Enabling high-resolution diagnostics in endoscopy, dentistry, microscopy, X-ray digital radiography (using scintillators), life science research, and astronomy. Requires exceptional image quality, sensitivity, and sometimes specific spectral responses beyond visible light.

- Surveillance & Security: Powering IP cameras, video doorbells, and security systems. Requirements include good low-light performance, wide dynamic range, resolution, and reliability for continuous operation.

- Emerging Fields: Biometrics (facial recognition, fingerprint scanning), gesture control, agricultural monitoring, and environmental sensing.

The Horizon: What’s Next for Optical Sensing?

Optical image sensor technology is far from static. Exciting trends include:

- Computational Photography: Leveraging multiple sensors, sophisticated algorithms, and AI to surpass the physical limitations of a single sensor, enabling features like enhanced low-light imaging (night mode), multi-frame HDR, computational bokeh, and super-resolution.

- 3D Sensing: Using technologies like Time-of-Flight (ToF) sensors (which measure how long emitted light takes to return from the scene) or structured light (which projects a known pattern and measures how it deforms) to add depth perception for facial recognition, AR/VR interaction, and spatial mapping.

- Event-Based Vision: Neuromorphic sensors, inspired by biological retinas, respond asynchronously to changes in brightness, offering ultra-low latency, high dynamic range, and minimal data output, which makes them ideal for high-speed motion analysis and power-sensitive edge AI.

- Expanded Spectral Range: Developing sensors sensitive beyond visible light (UV, NIR, SWIR) for diverse applications like material identification, agricultural health monitoring, and seeing through obstacles.

- Stacked and Hybrid Sensors: Integrating multiple layers (pixels, processing, memory) within the sensor package for faster performance, higher resolution, and smarter capabilities at the edge.
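Multi-frame night modes build on a simple statistical fact: averaging N frames of a static scene with independent random noise reduces that noise by roughly the square root of N. The Python sketch below simulates this with Gaussian noise on a flat grey patch. The scene, noise level, and frame count are assumptions, and the sketch skips the frame alignment, motion handling, and tone mapping that real computational-photography pipelines perform.

```python
import random
import statistics


def capture_frame(scene, noise_sigma, rng):
    """One noisy exposure: the true scene plus independent Gaussian noise per pixel."""
    return [value + rng.gauss(0.0, noise_sigma) for value in scene]


def stack_frames(frames):
    """Average frames pixel by pixel (assumes they are already aligned)."""
    n = len(frames)
    return [sum(pixel_values) / n for pixel_values in zip(*frames)]


rng = random.Random(42)        # fixed seed so the run is repeatable
scene = [100.0] * 10_000       # hypothetical flat grey patch
frames = [capture_frame(scene, noise_sigma=10.0, rng=rng) for _ in range(16)]

single_noise = statistics.pstdev(frames[0])
stacked_noise = statistics.pstdev(stack_frames(frames))
print(f"noise reduction: {single_noise / stacked_noise:.2f}x")  # close to sqrt(16) = 4
```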
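Time-of-Flight depth comes down to the constant speed of light. The Python sketch below shows the two standard forms: direct ToF, which times a light pulse's round trip, and indirect (phase-based) ToF, which infers the delay from the phase shift of amplitude-modulated light. The 10 ns delay and 20 MHz modulation frequency are illustrative assumptions, not parameters of any particular sensor.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0


def direct_tof_distance_m(round_trip_s):
    """Distance from a measured round-trip time; light travels out and back, hence / 2."""
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2


def indirect_tof_distance_m(phase_rad, mod_freq_hz):
    """Distance from the phase shift of light modulated at mod_freq_hz."""
    return SPEED_OF_LIGHT_M_S * phase_rad / (4 * math.pi * mod_freq_hz)


def unambiguous_range_m(mod_freq_hz):
    """Beyond this distance the phase wraps past 2*pi and depth readings alias."""
    return SPEED_OF_LIGHT_M_S / (2 * mod_freq_hz)


print(direct_tof_distance_m(10e-9))                # ~1.50 m for a 10 ns round trip
print(unambiguous_range_m(20e6))                   # ~7.49 m at 20 MHz
print(indirect_tof_distance_m(math.pi / 2, 20e6))  # ~1.87 m, a quarter of the wrap distance
```

The numbers also show why direct ToF needs very fine timing: a 1 cm depth step corresponds to only about 67 picoseconds of extra round-trip time.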
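The behavior of an event-based pixel can be approximated with a model commonly used for dynamic vision sensors. In this model, the pixel remembers a reference log-brightness and emits a positive or negative event each time the current log-brightness moves a fixed contrast threshold away from that reference. The Python sketch below applies this model to one pixel's brightness samples. The 0.2 threshold is an assumption, and real sensors add noise, per-pixel threshold mismatch, and refractory periods that are not modeled here.

```python
import math


def pixel_events(samples, threshold=0.2):
    """Emit (timestamp, polarity) events for one pixel.

    samples: list of (timestamp, brightness) pairs with brightness > 0.
    threshold: contrast step in log-brightness (hypothetical value).
    """
    events = []
    _, first_brightness = samples[0]
    reference = math.log(first_brightness)
    for t, brightness in samples[1:]:
        delta = math.log(brightness) - reference
        # A large change can cross the threshold several times, producing several events.
        while abs(delta) >= threshold:
            polarity = 1 if delta > 0 else -1
            events.append((t, polarity))
            reference += polarity * threshold
            delta = math.log(brightness) - reference
    return events


# A static pixel stays silent; only brightness changes produce output.
print(pixel_events([(0, 100.0), (1, 100.0), (2, 100.0)]))  # []
print(pixel_events([(0, 100.0), (1, 200.0), (2, 400.0)]))
# [(1, 1), (1, 1), (1, 1), (2, 1), (2, 1), (2, 1)]
```

Because the output is only a list of changes, an unchanging scene generates no data at all, which is where the low data rate and power savings come from.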

From transforming photons into cherished memories on our smartphones to enabling machines to perceive and interact with their environment, optical image sensors are foundational components driving technological progress. The relentless innovation in resolution, sensitivity, speed, and intelligence ensures that this invisible technology will continue to illuminate and shape our future in profound ways.