Imagine a self-driving car navigating a torrential downpour. Rain lashes the windshield, obscuring vision. Milliseconds matter for identifying a pedestrian stepping off the curb. Where does the crucial analysis happen? Increasingly, the answer lies right at the source, within the sensor itself or very close to it. This shift, known as Near Sensor Computing (NSC), is changing how we process data in our hyper-connected world: results arrive sooner, less data crosses the network, and decisions can be made locally.

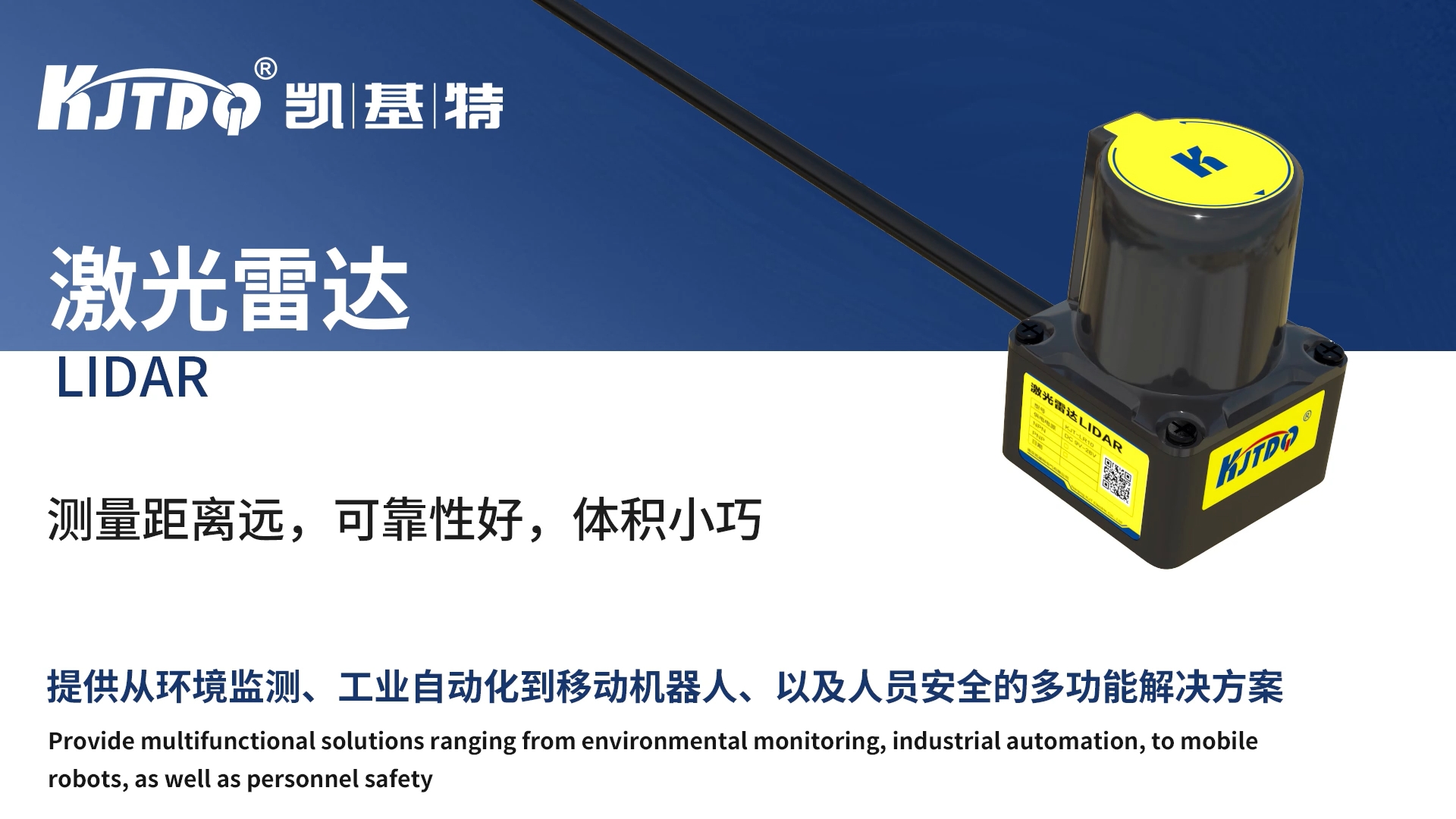

Traditionally, sensors – be they cameras, microphones, LiDAR units, or temperature gauges – acted as simple data collectors. They captured raw information and sent vast streams across networks to powerful, centralized cloud servers or distant data centers for processing, analysis, and decision-making. While cloud computing offers immense power, this model suffers from inherent drawbacks for latency-sensitive and bandwidth-hungry applications: significant delays (high latency), massive network bandwidth consumption, potential privacy vulnerabilities, and reliability issues if connectivity falters.

Near Sensor Computing flips this script. It moves computation physically closer to where data is generated: into the sensor module itself, onto the device containing the sensor (like a smartphone or robot), or into a nearby gateway or dedicated edge server. This proximity addresses each drawback of the cloud-only model. Decisions arrive with lower latency, only relevant data crosses the network, raw data can stay on the device, and the system keeps working when connectivity falters.
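To make the bandwidth argument concrete, here is a minimal sketch of one way a near-sensor node might decide what to forward: a send-on-delta filter that transmits a reading only when it differs enough from the last value sent. The class name `NearSensorFilter`, the threshold, and the sample readings are all hypothetical, chosen purely for illustration. Real deployments would pick a filtering strategy to suit the sensor.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class NearSensorFilter:
    """Hypothetical send-on-delta filter that runs next to the sensor."""
    threshold: float                      # minimum change worth reporting
    last_sent: Optional[float] = None     # last value forwarded upstream
    sent: List[float] = field(default_factory=list)

    def process(self, reading: float) -> bool:
        """Forward the reading only if it moved at least `threshold`
        away from the last forwarded value. Returns True if forwarded."""
        if self.last_sent is None or abs(reading - self.last_sent) >= self.threshold:
            self.last_sent = reading
            self.sent.append(reading)
            return True
        return False


def run_demo():
    # Illustrative temperature readings (made-up values).
    readings = [20.0, 20.1, 20.05, 20.2, 23.5, 23.6, 23.4, 20.1]
    node = NearSensorFilter(threshold=1.0)
    for r in readings:
        node.process(r)
    return len(readings), node.sent


if __name__ == "__main__":
    total, sent = run_demo()
    print(f"forwarded {len(sent)} of {total} readings: {sent}")
```

In this toy run only three of the eight readings leave the device. The rest are handled locally, which is the trade the paragraph above describes.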

The impact of Near Sensor Computing is already visible across diverse sectors, autonomous driving among them.

The future of Near Sensor Computing is closely tied to advances in Artificial Intelligence. Embedding lightweight, specialized AI models directly onto sensor hardware or nearby edge processors, an approach commonly called TinyML when it targets microcontroller-class devices, is accelerating rapidly. This allows more sophisticated local inference, such as anomaly detection, natural language understanding, or advanced computer vision tasks, extending what can be done right next to the sensor.
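As a rough illustration of local anomaly detection on a constrained device, the sketch below uses a streaming z-score test built on Welford's running mean and variance. This is a simple statistical stand-in, not a TinyML neural model. It is shown because it needs only three numbers of state, which is the kind of footprint near-sensor hardware can afford. The class name, threshold, and warm-up length are assumptions made for the example.

```python
import math


class StreamingAnomalyDetector:
    """Constant-memory z-score detector using Welford's algorithm.

    Hypothetical example; `warmup` should be at least 2 so the
    sample variance is defined before any test is made.
    """

    def __init__(self, z_threshold: float = 3.0, warmup: int = 5):
        self.z_threshold = z_threshold
        self.warmup = warmup
        self.n = 0        # number of normal samples seen
        self.mean = 0.0   # running mean of normal samples
        self.m2 = 0.0     # running sum of squared deviations

    def update(self, x: float) -> bool:
        """Return True if x looks anomalous given the samples so far."""
        is_anomaly = False
        if self.n >= self.warmup:
            std = math.sqrt(self.m2 / (self.n - 1))
            if std > 0 and abs(x - self.mean) / std > self.z_threshold:
                is_anomaly = True
        if not is_anomaly:
            # Fold only normal samples into the running statistics.
            self.n += 1
            delta = x - self.mean
            self.mean += delta / self.n
            self.m2 += delta * (x - self.mean)
        return is_anomaly


def detect(samples):
    """Return the indices of samples flagged as anomalous."""
    detector = StreamingAnomalyDetector()
    return [i for i, x in enumerate(samples) if detector.update(x)]


if __name__ == "__main__":
    # Made-up vibration readings with one obvious spike.
    print(detect([10.0, 10.2, 9.9, 10.1, 9.8, 10.0, 25.0, 10.1]))
```

Only the flagged indices would need to be reported upstream. A trained model could replace the z-score test without changing the surrounding structure.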

Challenges remain, of course. Designing efficient, low-power processors capable of handling demanding tasks in constrained sensor environments is complex. Developing and managing software distributed across vast numbers of edge devices requires new tools and paradigms. However, the steady demand for real-time intelligence, bandwidth optimization, and enhanced privacy suggests that Near Sensor Computing is not just a trend but a cornerstone of the next generation of intelligent systems. By processing data where it is born, we gain speed, efficiency, and insights that a cloud-only model struggles to deliver.