optical position sensors in robotics

- time:2025-08-16 00:00:14

Unlocking Robotic Precision: The Essential Role of Optical Position Sensors

Forget clunky gears or imprecise potentiometers. Today’s sophisticated robots, performing delicate surgeries, assembling intricate electronics, or navigating complex warehouses, rely on a different kind of sense: vision through light. At the heart of this capability lies a critical technology enabling accurate perception of movement and location: optical position sensors. These silent sentinels provide the real-time feedback that transforms robotic systems from rigid machines into adaptable, precise, and intelligent actors.

Understanding the Optic Nerve of Robotics

At its core, an optical position sensor determines the location, displacement, or orientation of an object relative to a reference point using light. Instead of physical contact, it employs various light-based techniques to achieve this measurement:

- Triangulation (Active): The sensor projects a focused beam of light (often laser or LED) onto the target surface. The reflected light is captured by a position-sensitive detector (like a photodiode array or a camera) at a known angle. By calculating the position where the reflected light strikes the detector, the sensor accurately determines the distance to the target. This principle is fundamental in laser displacement sensors.

- Time-of-Flight (ToF): The sensor emits a pulse of light and measures the time it takes for that pulse to travel to the target and back. Because the pulse covers the distance twice, the range is half the round-trip time multiplied by the speed of light. ToF technology is increasingly common in LiDAR systems for robot navigation and depth sensing.

- Optical Encoding: A precise pattern (like lines or slots on a disk or strip, known as the encoder scale) moves relative to a light source and detector(s). As the pattern moves, it modulates the light beam. An onboard processor counts these modulations and/or interprets unique patterns to determine position, speed, and direction with high resolution and repeatability. This forms the basis of both rotary and linear encoders.

- Image Analysis: Advanced vision sensors or cameras capture images of the target environment. Sophisticated software then analyzes these images to track features, identify objects, and deduce their position and orientation in 2D or 3D space.
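The two range-finding principles in the list above reduce to short formulas. The sketch below is illustrative only: the function names are hypothetical, and the triangulation model assumes an idealized geometry in which the laser beam runs parallel to the detector's optical axis at a known baseline, so similar triangles give distance = focal length × baseline / spot offset. Real sensors use calibrated, often non-linear models.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s


def tof_distance(round_trip_s: float) -> float:
    """Range from a time-of-flight measurement.

    The pulse travels to the target and back, so the one-way
    distance is half the round-trip path.
    """
    if round_trip_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return C * round_trip_s / 2.0


def triangulation_distance(spot_offset_m: float,
                           focal_length_m: float,
                           baseline_m: float) -> float:
    """Range from the spot position on the detector (idealized model).

    Assumes the beam is parallel to the optical axis and offset from
    the lens by baseline_m; the spot then lands at
    offset = focal_length * baseline / distance on the detector.
    """
    if spot_offset_m <= 0:
        raise ValueError("spot offset must be positive")
    return focal_length_m * baseline_m / spot_offset_m


if __name__ == "__main__":
    # A 10 ns round trip corresponds to roughly 1.5 m.
    print(tof_distance(10e-9))
    # Assumed optics: 20 mm focal length, 50 mm baseline, 1 mm spot offset.
    print(triangulation_distance(0.001, 0.020, 0.050))
```

Note how the two methods scale differently: in this model the triangulation spot offset shrinks as 1/distance, so resolution degrades at long range, whereas ToF accuracy is limited mainly by timing precision.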

Key Types Powering Robotic Movement

Several specific sensor types leveraging these principles are ubiquitous in modern robotic systems:

- Photodiodes/Phototransistors: Often used in simple reflective or break-beam sensors to detect presence/absence or proximity.

- Photodiode Arrays / Position Sensitive Detectors (PSDs): Core components in laser triangulation sensors. A PSD outputs analog currents from which the centroid of the incident light spot is derived, while a photodiode array reports which elements are illuminated. Both enable high-speed, non-contact distance measurement.

- Optical Encoders:

  - Incremental Encoders: Generate pulse signals as the encoder scale moves, indicating relative movement and speed. Absolute position requires a known starting point (homing).

  - Absolute Encoders: Provide a unique digital code for every position within their range, so the position is known immediately on power-up without homing. Crucial for safety-critical robotic joints and positioning systems.

- Laser Displacement Sensors: Leverage triangulation to provide extremely accurate, non-contact measurements of distance, height, thickness, or vibration. Ideal for robotic quality inspection and micro-positioning tasks.

- Laser Trackers: Use precise laser beams and angular encoders to measure the 3D position of a retroreflector held by the robot or placed on a workpiece. Used for large-scale metrology and robot calibration.

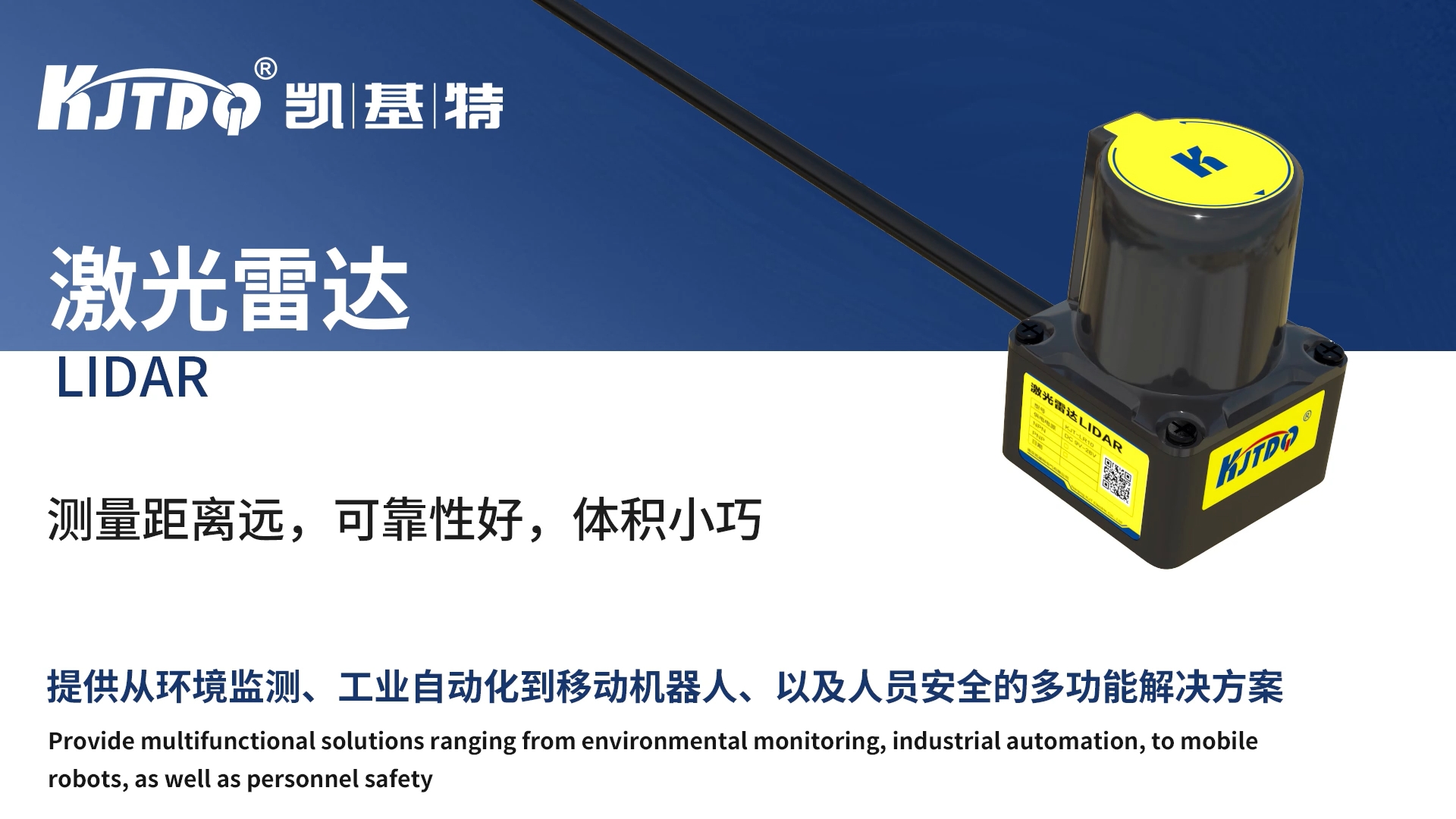

- LiDAR Sensors (Light Detection and Ranging): Emit rapid laser pulses across a field of view and measure their time-of-flight to create detailed real-time 3D point clouds of the environment. Essential for autonomous mobile robot (AMR) navigation and obstacle avoidance.

- Vision Systems (Cameras + Processing): Although strictly sensor systems rather than single sensors, cameras combined with computer vision algorithms perform complex optical position and orientation sensing, vital for bin picking, assembly verification, and visual servoing.
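The difference between the incremental and absolute encoders listed above shows up clearly in how their signals are decoded. The following is a minimal sketch under stated assumptions: the incremental encoder is assumed to expose two quadrature channels (A and B), the "forward" direction is an arbitrary convention chosen here, and the absolute encoder is assumed to output Gray code, which many (not all) absolute encoders use. All names are hypothetical.

```python
# Valid single-step transitions between quadrature states, where a
# state is (A << 1) | B. Forward is assumed to be 00 -> 01 -> 11 -> 10.
_STEP = {
    (0b00, 0b01): +1, (0b01, 0b11): +1, (0b11, 0b10): +1, (0b10, 0b00): +1,
    (0b00, 0b10): -1, (0b10, 0b11): -1, (0b11, 0b01): -1, (0b01, 0b00): -1,
}


def count_quadrature(samples):
    """Net count (in quadrature edges) from a sequence of (A, B) levels.

    The result is relative to the first sample, which is why an
    incremental encoder needs homing to obtain an absolute position.
    """
    it = iter(samples)
    try:
        a, b = next(it)
    except StopIteration:
        return 0
    prev = (a << 1) | b
    count = 0
    for a, b in it:
        state = (a << 1) | b
        if state == prev:
            continue  # no edge between these two samples
        step = _STEP.get((prev, state))
        if step is None:
            # Both channels changed at once: a sample was missed.
            raise ValueError("invalid quadrature transition")
        count += step
        prev = state
    return count


def gray_to_binary(gray: int) -> int:
    """Convert a Gray-coded position word to a plain binary position."""
    binary = gray
    shift = gray >> 1
    while shift:
        binary ^= shift
        shift >>= 1
    return binary


if __name__ == "__main__":
    forward = [(0, 0), (0, 1), (1, 1), (1, 0), (0, 0)]
    print(count_quadrature(forward))        # one full cycle forward
    print(count_quadrature(forward[::-1]))  # same cycle in reverse
    print(gray_to_binary(0b111))            # absolute position word
```

Gray code is a natural fit for absolute scales because adjacent positions differ in exactly one bit, so a read taken mid-transition is off by at most one position.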

Robotics Applications: Where Optical Precision Shines

The unique capabilities of optical position sensors make them indispensable across diverse robotic domains:

- Manufacturing & Assembly: Robots assembling smartphones or circuit boards rely on absolute encoders for precise joint positioning. Laser displacement sensors verify component placement height or detect microscopic defects on machined parts. Vision-guided robots locate parts on a conveyor.

- Material Handling & Logistics: AMRs navigating dynamic warehouse floors depend heavily on LiDAR and vision sensors for simultaneous localization and mapping (SLAM), obstacle detection, and path planning. Encoders confirm wheel movement and distance traveled.

- Medical Robotics: Surgical robots typically rely on absolute encoders in their joints for sub-millimeter accuracy and patient safety. Optical trackers monitor the position of surgical tools relative to patient anatomy for image-guided surgery. PSDs can provide fine position feedback in micromanipulators.

- Research & Laboratory Automation: Precise positioning for sample handling, microscopy stages, and experimental setups often employs linear optical encoders and laser interferometers for nanometer-level precision.

- Consumer & Service Robots: Vacuum robots combine downward-facing reflective infrared sensors for cliff detection with optical encoders on the wheels for dead reckoning. Drones utilize optical flow sensors (a form of vision-based position sensing) for stable hovering.
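Dead reckoning from wheel encoders, mentioned above for AMRs and vacuum robots, can be sketched for a differential-drive base. This is a simplified illustration, not any vendor's implementation: the function name and parameters are hypothetical, the wheels are assumed not to slip, and the pose update uses a midpoint approximation that is only accurate for small steps between encoder reads.

```python
import math


def update_pose(x, y, theta, left_ticks, right_ticks,
                ticks_per_rev, wheel_radius_m, track_width_m):
    """Advance a 2D pose (x, y in metres, theta in radians) by one step.

    left_ticks and right_ticks are the signed encoder counts
    accumulated by each wheel since the previous update.
    """
    metres_per_tick = 2.0 * math.pi * wheel_radius_m / ticks_per_rev
    d_left = left_ticks * metres_per_tick
    d_right = right_ticks * metres_per_tick

    d_center = (d_left + d_right) / 2.0          # distance along the path
    d_theta = (d_right - d_left) / track_width_m  # change in heading

    # Midpoint approximation: move along the average heading of the step.
    heading = theta + d_theta / 2.0
    x += d_center * math.cos(heading)
    y += d_center * math.sin(heading)
    theta += d_theta
    return x, y, theta


if __name__ == "__main__":
    # Assumed robot: 1000 ticks/rev, 5 cm wheel radius, 30 cm track width.
    pose = (0.0, 0.0, 0.0)
    pose = update_pose(*pose, 1000, 1000, 1000, 0.05, 0.30)  # drive straight
    pose = update_pose(*pose, -100, 100, 1000, 0.05, 0.30)   # turn in place
    print(pose)
```

Because each update adds to the previous estimate, encoder errors and wheel slip accumulate over time, which is one reason such robots pair odometry with LiDAR or vision.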

Strengths: The Optical Advantage

Optical position sensors offer compelling benefits that drive their adoption in robotics:

- Non-Contact Measurement: Eliminates friction, wear, and mechanical hysteresis, ensuring long-term reliability and accuracy. Vital for high-speed or delicate applications.

- High Accuracy & Resolution: High-end optical systems, such as interferometric encoders, resolve distances down to nanometers and angles down to microradians, beyond what most contact-based methods achieve.

- High Speed: Optical methods like ToF and encoding allow for very fast sampling rates, enabling real-time control of dynamic robotic movements.

- Versatility: Available in configurations for measuring linear or angular displacement, proximity, distance, and complex 3D positions.

- Low EMI Susceptibility: The optical measurement path itself is unaffected by magnetic fields, so well-shielded optical sensors are generally less susceptible than some magnetic sensors to the electromagnetic interference common in industrial environments.

Challenges & Considerations

Despite their strengths, selecting and implementing optical sensors requires careful thought:

- Environmental Sensitivity: Dust, smoke, water, strong ambient light, or oil can interfere with light beams, degrading performance or causing failures. Sealing, protective housings, and careful environmental control are often necessary.

- Target Surface Dependence: Performance (especially in triangulation/reflection-based sensors) can vary with target surface color, reflectivity, texture, and transparency. Calibration for specific surfaces might be needed.

- Cost & Complexity: High-precision optical encoders or LiDAR systems can be significantly more expensive than simpler sensors. Integration with processing units adds complexity.

- Computational Load: Especially for vision systems and LiDAR, processing the large amounts of data generated demands significant computational power, impacting system design and cost.

- Calibration & Alignment: Precise installation and periodic calibration are often required to maintain accuracy, particularly for systems involving multiple sensors or triangulation.

The Future: Brighter, Smarter, More Integrated

The trajectory for optical position sensing in robotics points towards greater sophistication:

- Miniaturization: Smaller, lighter sensors enable deployment in compact or collaborative robots (cobots).

- Increased Resolution & Speed: Continuous improvements enhance precision and control capabilities for more demanding tasks.

- Sensor Fusion: Combining optical data with inertial sensors (IMUs), force/torque sensors, or other modalities provides a more robust and comprehensive understanding of the robot’s state and environment. This fusion is key to true autonomy.

- Embedded AI: Moving processing directly onto the sensor (“edge AI”) enables faster reaction times and smarter local decision-making (e.g., immediate object recognition on a vision sensor).

- Cost Reduction: Advancements in manufacturing (especially for MEMS-based devices